Idea to Solution in 30 Minutes

The great thing about experimenting with AI is that it’s okay if it fails. By trade, I’m a designer, so not every problem that comes my way requires me to dive in and engineer a solution. But sometimes? Sometimes it’s just fun to try—especially when the consequences are low.

Those consequences can ramp up quickly, though, if you’re devoting a lot of time to it. So the goal here was speed. The idea is obvious, but how quickly can I do it?

Turns out: about 30 minutes flat.

The Problem Statement

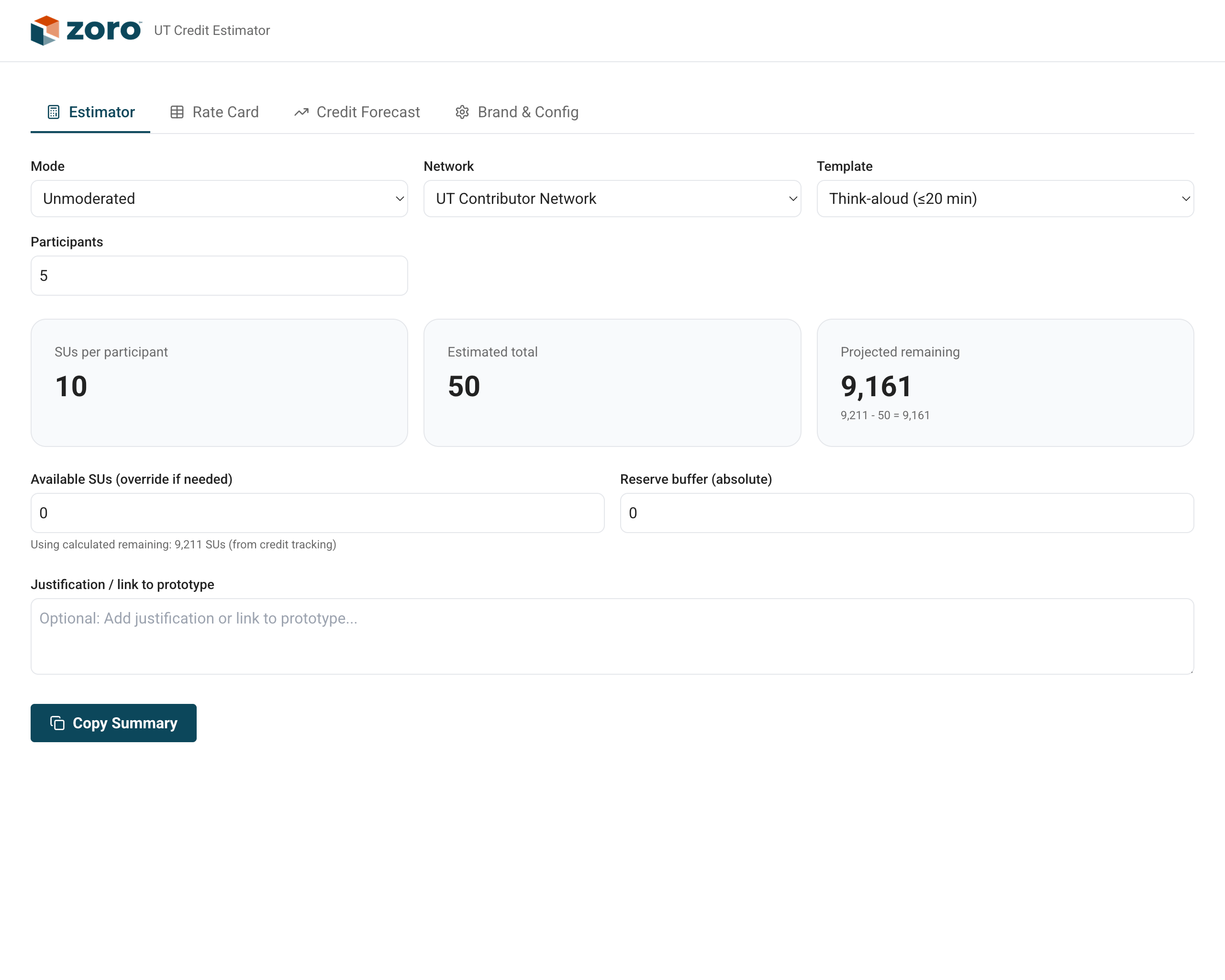

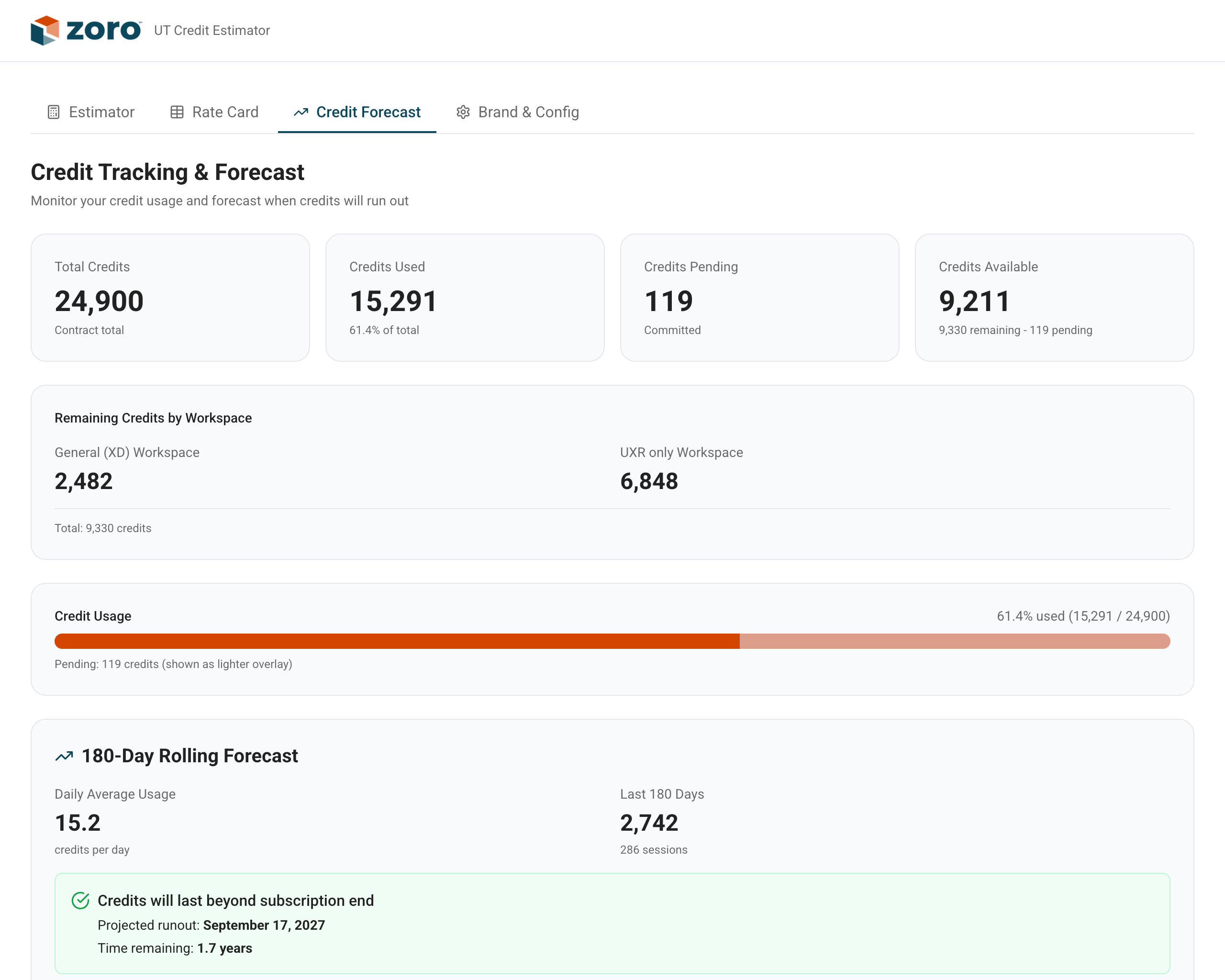

Recently, during a meeting, we were told our User Testing credits were projected to run out before the end of our contract. That would mean no more user tests without prior approval and justification. On paper, the approval process was simple enough: Slack the Research Manager, ask, and do your best to estimate the credits you'll use.

For starters, there was no official form. Just a Slack message you hoped a busy person would see and respond to. On top of that, credit usage was intentionally opaque. You don’t know how many credits a test will use until after you run it.

We also didn’t have a clear picture of how many credits we had left week to week. Credit usage and remaining balance live behind an admin account, and not everyone has admin access.

The Idea

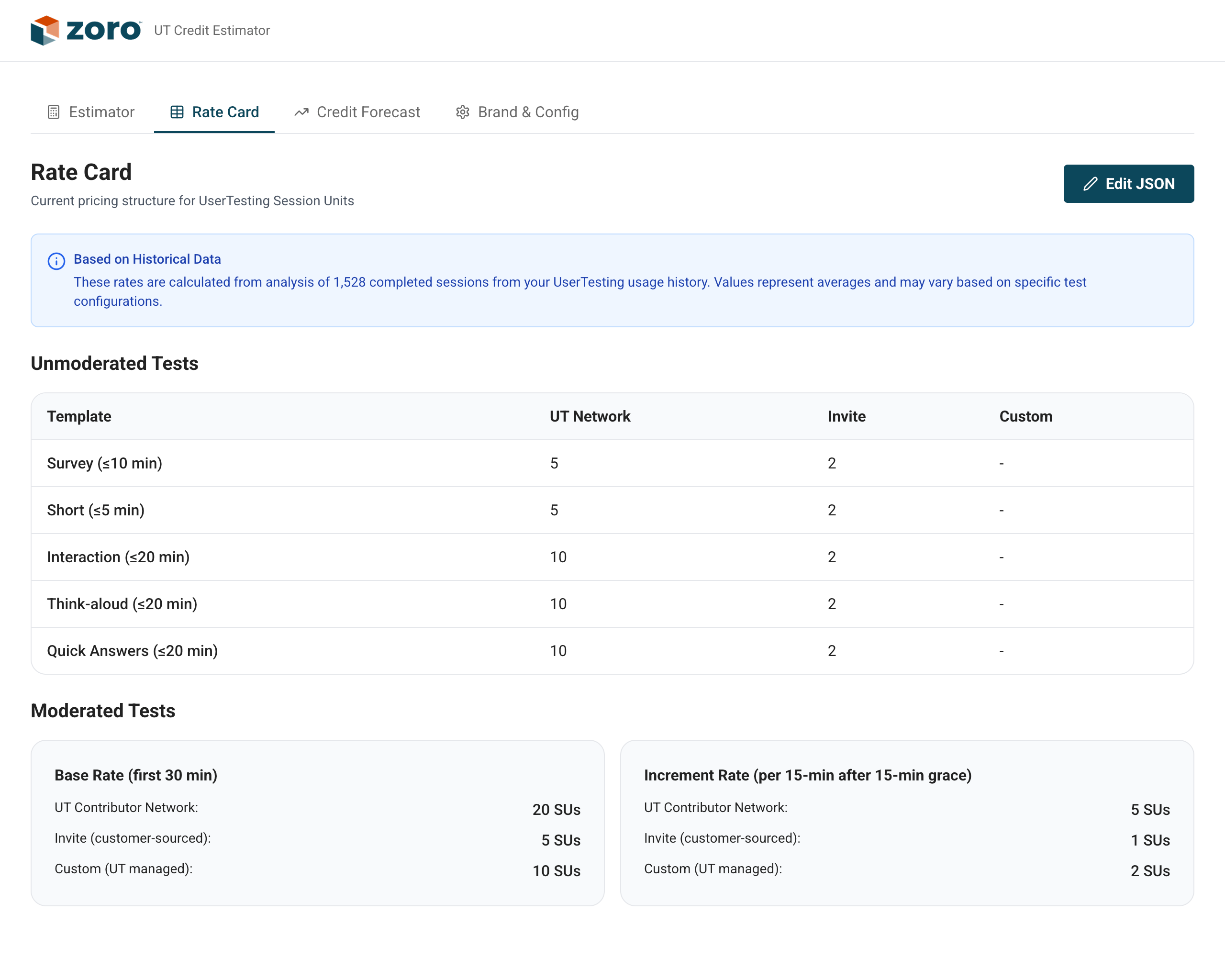

I was confident that, with the help of some robotic research, I could figure this out. I had access to all of our previous tests and their credit usage, including detailed breakdowns. I also knew our remaining balance from a deck that had been shared earlier.

I immediately knew what was possible. Just not how long it would take.

If I fed all our test usage data into ChatGPT, could it analyze the data and give me a clear picture of each test type, its duration, and how many credits it used? Yes. Very easily, as it turns out.

Could I use that information to build an estimator?

Could that estimator include a form to request new tests?

And could it track our running balance so we weren’t flying blind?

The idea is obvious, but how quickly can I do it?


It was 4:30 on a Tuesday. I had about 30 minutes to find out. Any more than that, and it would start interfering with actual work.

Fuck it. Let’s go.

The Results

I don’t have to tell you that AI is great at automating tedious work, especially scanning large tables, databases, and documents. When I fed in the credit usage CSV, I had answers and a working estimation model in about 45 seconds.
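The post doesn't show the model ChatGPT produced, so here is only a rough idea of what a credit estimator like this might look like. It is a minimal sketch assuming a hypothetical CSV with `test_type`, `duration_min`, and `credits` columns (the real User Testing export format isn't described). It pools each test type's total credits over its total minutes, then multiplies by a planned duration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: the real User Testing CSV columns are an assumption here.
SAMPLE_CSV = """test_type,duration_min,credits
unmoderated,15,30
unmoderated,20,40
moderated,30,90
"""


def build_rates(csv_text: str) -> dict[str, float]:
    """Return credits per minute for each test type (total credits / total minutes)."""
    totals = defaultdict(lambda: [0.0, 0.0])  # test_type -> [credits, minutes]
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["test_type"]][0] += float(row["credits"])
        totals[row["test_type"]][1] += float(row["duration_min"])
    # Skip any type with zero recorded minutes to avoid dividing by zero.
    return {t: c / m for t, (c, m) in totals.items() if m > 0}


def estimate_credits(rates: dict[str, float], test_type: str, duration_min: float) -> float:
    """Estimate credits for a planned test from the historical rate for its type."""
    return rates[test_type] * duration_min


rates = build_rates(SAMPLE_CSV)
print(estimate_credits(rates, "moderated", 45))  # 135.0
```

Pooling totals rather than averaging per-test rates keeps long tests from being underweighted. A real model would likely need more inputs, such as participant count.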

From there, I asked ChatGPT how to build the solution.

I told it what the tool needed to do, the general rules, the build stack, where it would live internally, and asked it to generate the documents I’d need to build it in Cursor. That included a prompt I could feed directly into Cursor to generate the app (this was before Cursor had Plan Mode).

Because this was an internal proof of concept, I didn’t spend much time designing it. I wasn’t worried about branding or perfect UX. I just wanted to see if it worked. The goal was proof, not polish. If it worked, I could get permission to build it properly and set it free.

Here’s the wild part: it was done and working in under 14 minutes.

That left me with enough time to clean up the UI a bit and apply our brand colors. Is it exactly how I would have designed it? No. Was it terrible? Also no. For an internal tool used by maybe 12 people, it was more than good enough.

The Wrap Up

I started at 4:30 p.m. with a simple goal: gauge the level of effort.

By 4:50 p.m., I was back on the phone with the manager, walking through a finished solution. That 30-minute window included the presentation. How crazy is that?

The goal was a proof of concept. I got a finished product.


I set out to build a simple proof of concept, or at least confirm that the idea was viable. What I ended up with was a fully working tool, complete with documented data sources and transparent calculations for how estimates were generated. All that was left was spinning up a place to host it internally.

There's one caveat worth mentioning: there's no way to connect directly to our User Testing account. No API. So once a week, someone uploads a fresh usage CSV and plugs in the current balance. From there, it just works.
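To illustrate how a weekly balance plus pending requests could keep everyone from flying blind, here is a minimal sketch. The `Request` type and both functions are hypothetical, not the tool's actual code. It assumes approved-but-not-yet-run tests are deducted from the manually entered balance before a new request is checked.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical test request with an estimated credit cost."""
    name: str
    estimated_credits: float
    approved: bool


def projected_balance(current_balance: float, requests: list[Request]) -> float:
    """Balance left after deducting every approved request that hasn't run yet."""
    committed = sum(r.estimated_credits for r in requests if r.approved)
    return current_balance - committed


def can_approve(current_balance: float, requests: list[Request], new: Request) -> bool:
    """True if the projected balance still covers the new request's estimate."""
    return projected_balance(current_balance, requests) >= new.estimated_credits


pending = [
    Request("checkout flow", 120, approved=True),
    Request("onboarding", 80, approved=False),  # not approved, so not deducted
]
print(projected_balance(500, pending))  # 380
print(can_approve(500, pending, Request("search", 400, approved=False)))  # False
```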

The next step is to see how far we can push this. Could we automate pulling the usage data and current balance and uploading them into the database? I bet an agent could do that.

No API? Maybe that’s not a problem after all.